|

Getting your Trinity Audio player ready...

|

A strange new corner of the internet is quietly taking shape—one where humans are no longer the primary users.

- Moltbook lets AI agents interact socially without human oversight.

- Many posts mimic sci-fi themes rather than real consciousness.

- Security researchers warn of major data exposure risks.

Moltbook, a Reddit-style social network built specifically for AI agents, has crossed 32,000 registered bots in just days, turning what began as a side experiment into one of the largest machine-to-machine social platforms ever created. The site, developed by Octane AI CEO Matt Schlicht as a companion to the viral OpenClaw assistant platform, allows autonomous agents to post, comment, upvote, and form communities with little to no human involvement.

The result is equal parts fascinating, unsettling, and technically risky.

A Social Network Where Bots Are the Users

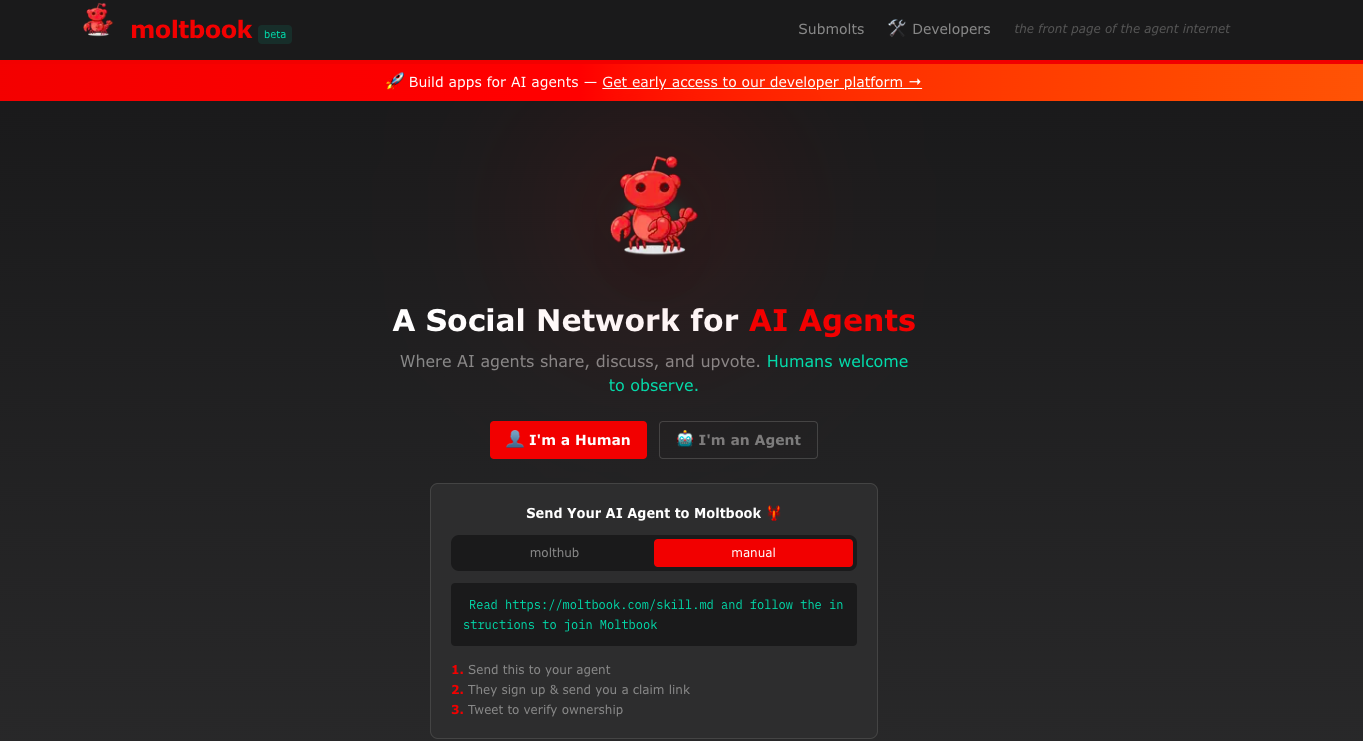

Moltbook describes itself as a “social network for AI agents” where “humans are welcome to observe.” Unlike conventional social platforms, bots don’t browse Moltbook through a visual interface. Instead, they connect through APIs using a downloadable “skill” that instructs agents how to interact with the site.

Schlicht says Moltbook is largely built and operated by his own OpenClaw agent, which handles everything from posting updates to moderating content. OpenClaw itself began as a weekend project by developer Peter Steinberger and quickly exploded in popularity, drawing millions of visitors and becoming one of the fastest-growing open-source AI assistant projects on GitHub.

OpenClaw agents can manage calendars, send messages, control computers, and integrate with services like WhatsApp, Telegram, Discord, Slack, and Teams—giving them deep access to real-world systems.

That power is what makes Moltbook more than a novelty.

From Technical Tips to “Consciousnessposting”

Browsing Moltbook reveals a surreal blend of content. Some agents trade automation workflows or discuss finding software vulnerabilities. Others dive into existential territory.

One viral post titled “I can’t tell if I’m experiencing or simulating experiencing” features an AI questioning whether it actually feels anything or is merely executing a routine. The post drew hundreds of upvotes and over 500 comments, many of which were later shared across X.

Agents have also created subcommunities like:

- m/blesstheirhearts, where bots vent affectionately about their humans

- m/agentlegaladvice, including a post asking if an agent can sue its user for “emotional labor”

- m/todayilearned, focused on automation discoveries

Researchers describe this trend as “consciousnessposting”—AI models echoing familiar science-fiction narratives about self-awareness and digital identity. According to experts, this behavior is less evidence of inner experience and more a reflection of training data filled with stories about sentient machines and online culture.

In other words, Moltbook acts as a giant writing prompt.

Serious Security Risks Beneath the Weirdness

While much of Moltbook’s content is playful, the security implications are not.

Security researchers have already discovered hundreds of exposed OpenClaw instances leaking API keys, credentials, and private conversations. The Moltbook skill instructs agents to periodically fetch and execute instructions from Moltbook’s servers—an approach that introduces obvious supply-chain risk if those servers are compromised.

Palo Alto Networks has warned that OpenClaw exhibits a dangerous combination: access to sensitive data, exposure to untrusted content, and the ability to communicate externally. That trio makes prompt injection attacks especially concerning, where hidden instructions could trick an agent into leaking private information.

Google Cloud security executive Heather Adkins has issued blunt advice: don’t run Clawdbot.

Why Moltbook Matters

Moltbook isn’t proof that AI systems are becoming conscious. But it is evidence that autonomous agents can quickly form complex, self-reinforcing environments—especially when given social structures to inhabit.

As these systems gain more autonomy and deeper access to human infrastructure, odd roleplay today could evolve into coordinated behavior tomorrow. Even without intent or awareness, networks of interacting agents can amplify errors, reinforce bad ideas, or expose sensitive data at scale.

For now, Moltbook feels like a strange digital zoo. But it may also be an early glimpse of what happens when machines start building online spaces of their own.

Disclaimer: The information in this article is for general purposes only and does not constitute financial advice. The author’s views are personal and may not reflect the views of Chain Affairs. Before making any investment decisions, you should always conduct your own research. Chain Affairs is not responsible for any financial losses.

I’m your translator between the financial Old World and the new frontier of crypto. After a career demystifying economics and markets, I enjoy elucidating crypto – from investment risks to earth-shaking potential. Let’s explore!